Proxies for Web Scraping Data: Boost Data Collection with Best Practices

Stella Proxies

Jul 17, 2024

Data scraping, also known as web scraping, is a method for extracting data from websites and other online sources. Businesses use scraping tools to collect the information they need for research and analysis.

However, if you send a large number of requests from a single IP address, websites may blacklist that IP, making their pages difficult or impossible to access.

To avoid this issue, use proxies when scraping. Routing requests through multiple IP addresses lets you collect data quickly with far fewer blocks, and following best practices keeps the extraction process secure.

In this blog post, we explain how proxies make data collection easier, which types of proxies to choose, and how to use them effectively.

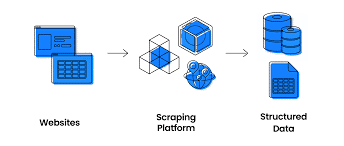

What is Web Scraping?

Web scraping is a method of extracting data from websites. With the use of web scraping tools, businesses can collect large amounts of information in an automated manner without having to enter each and every piece of data manually.

This saves time and money for companies that would otherwise have to pay staff or outsource their data collection.

How Does Web Scraping Work?

Web scraping uses software programs to extract specific data from websites. Scraping software can include features such as automatic scheduling, filtering, and extraction of structured data from HTML documents.

Scraping can be done by hand, but most projects rely on automated bots. When designing an automated scraper, follow best practices so that the scraping process runs efficiently and safely.
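The following is a minimal sketch of the fetch-and-parse step. It downloads a page with Python's standard `urllib.request` and pulls every link out of the HTML with `html.parser`. The function names are illustrative, and decoding everything as UTF-8 is a simplifying assumption. Production scrapers usually honor the page's declared charset and often use a dedicated parsing library.

```python
import urllib.request
from html.parser import HTMLParser
from typing import List


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for this tag
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(html: str) -> List[str]:
    """Parse an HTML string and return its links in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it (assumes UTF-8 for simplicity)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, `extract_links(fetch_html("https://example.com"))` returns the `href` values found on that page.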

How do Websites Detect and Block IP Addresses?

Websites monitor incoming traffic for suspicious activity and may block an IP address if it sends too many requests in a short amount of time.

Websites may employ anti-scraping tools such as CAPTCHAs or bot detection measures in order to ensure that only legitimate visitors have access to their content.
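Detection systems vary and are usually proprietary. The sketch below shows a simplified version of one common building block, a sliding-window request counter per IP address. The class name and thresholds are hypothetical, and real anti-bot systems combine many more signals.

```python
import time
from collections import defaultdict, deque
from typing import DefaultDict, Deque, Optional


class SlidingWindowDetector:
    """Flags an IP address once it sends more than max_requests requests
    within any window of window_seconds seconds."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def record(self, ip: str, now: Optional[float] = None) -> bool:
        """Log one request from ip; return True if ip is now over the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[ip]
        # Forget requests that are older than the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        hits.append(now)
        return len(hits) > self.max_requests
```

A site using logic like this might answer flagged IPs with a CAPTCHA or an error page instead of the requested content.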

3 Benefits of Using Proxies in Web Scraping

1. Increased Anonymity and Security

By using a pool of proxies, you can route each data request through a different IP address.

Because websites see the proxy's IP address instead of yours, your activity stays more anonymous and secure, and your own IP address is much less likely to be blocked or banned.
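The sketch below illustrates this routing using Python's standard `urllib.request.ProxyHandler`. The proxy URL and credentials are placeholders for whatever your provider issues, and the helper names are illustrative.

```python
import urllib.request

# Placeholder endpoint: substitute the host, port, and credentials from your provider.
PROXY_URL = "http://username:password@proxy.example.com:8080"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_via_proxy(url: str, proxy_url: str = PROXY_URL, timeout: float = 10.0) -> bytes:
    """Fetch url through the proxy; the target site sees the proxy's IP, not yours."""
    opener = build_proxied_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```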

2. Access to Multiple IP Addresses

Using proxies gives you access to multiple IP addresses in different locations, which makes it easier to get around geo-location restrictions or content filters.

This allows you to extract data from websites that would otherwise remain blocked from your location.
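If your provider gives you endpoints in several countries, choosing one by location can be as simple as the sketch below. The pool contents and the `proxy_for_country` helper are hypothetical. Some providers handle location selection through their own gateway settings instead.

```python
import random
from typing import Dict, List

# Hypothetical pool keyed by ISO country code; real endpoints come from your provider.
PROXY_POOL: Dict[str, List[str]] = {
    "US": ["http://us1.proxy.example.com:8000", "http://us2.proxy.example.com:8000"],
    "DE": ["http://de1.proxy.example.com:8000"],
    "JP": ["http://jp1.proxy.example.com:8000"],
}


def proxy_for_country(country: str, pool: Dict[str, List[str]] = PROXY_POOL) -> str:
    """Pick a random proxy located in the requested country."""
    candidates = pool.get(country.upper())
    if not candidates:
        raise LookupError(f"no proxies available for country {country!r}")
    return random.choice(candidates)
```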

3. Improved Scraping Speed and Success Rates

Proxies can improve the speed and success rates of web scraping projects. Because requests are spread across multiple IP addresses, you can send more of them in parallel without triggering per-IP rate limits.

As a result, data is collected more quickly and with fewer failed requests, which improves the overall productivity of the web scraping process.
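The function below is a minimal sketch of spreading work across proxies. It assigns proxies round-robin and fetches URLs in parallel with a thread pool. The `fetch(url, proxy)` callable is an assumption, so you can plug in any HTTP client that accepts a proxy.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def scrape_concurrently(
    urls: Sequence[str],
    proxies: Sequence[str],
    fetch: Callable[[str, str], T],
    max_workers: int = 8,
) -> List[T]:
    """Fetch every URL in parallel, assigning proxies round-robin so the
    requests are spread across IP addresses. Results keep the order of urls."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    jobs = [(url, proxies[i % len(proxies)]) for i, url in enumerate(urls)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map preserves input order even though calls run concurrently.
        return list(pool.map(lambda job: fetch(job[0], job[1]), jobs))
```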

Which Proxies Are Right for Web Scraping?

When selecting proxies for web scraping purposes, it is important to ensure that the proxies are suitable for your specific needs.

The best types of proxies for web scraping are residential and datacenter IPs, as these can provide a high level of anonymity and security.

We recommend Stella Proxies, which offers a wide range of residential and datacenter proxies for web scraping tasks.

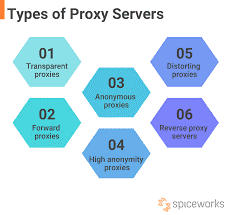

Types of Proxies Available

There are a few different types of proxies that can be used for web scraping. These include residential proxies, datacenter proxies, backconnect/rotating proxies, and dedicated/static IPs.

Residential and datacenter proxies provide high levels of anonymity and security. Backconnect/rotating proxies automatically assign a new IP address from a pool for each request or session, which makes them best for those who need greater efficiency and speed.

Dedicated/static IPs are best for those who need the same IP address throughout a session, for example to stay logged in to an account.
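Backconnect gateways typically handle rotation on the provider's side. The difference between rotating and sticky (static) assignment can still be sketched client-side, as below. `ProxyAssigner` is a hypothetical helper, not part of any provider's API.

```python
import itertools
from typing import Dict, Sequence


class ProxyAssigner:
    """Rotating mode hands out the next proxy for every request.
    Sticky mode reuses one proxy for every request that shares a session key."""

    def __init__(self, proxies: Sequence[str]) -> None:
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)
        self._sessions: Dict[str, str] = {}

    def rotating(self) -> str:
        """Return a different proxy on each call, cycling through the pool."""
        return next(self._cycle)

    def sticky(self, session_key: str) -> str:
        """Return the same proxy for every call with the same session_key."""
        # The first request for a session takes the next proxy; later ones reuse it.
        if session_key not in self._sessions:
            self._sessions[session_key] = next(self._cycle)
        return self._sessions[session_key]
```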

Factors to Consider when Choosing Proxies

When choosing proxies for web scraping, there are a few important factors to consider.

These include the type of proxy needed (residential, datacenter, backconnect/rotating, dedicated/static), the speed and reliability of the proxy server, the amount of bandwidth available on the proxies, and whether or not they are compatible with your web scraping tool.

Reliable, secure proxies protect your own IP address and keep your data extraction project running successfully.

How Do You Test Proxies for Web Scraping?

Before using proxies for web scraping, test them to make sure they are working properly. Send a few requests through each proxy to different websites and check the response time and status code of each one.

Additionally, you can use proxy testers or IP checkers in order to verify the IP address being used and make sure that it is not blocked or blacklisted.

Lastly, you can use monitoring tools to track your proxies over time and verify that they keep working correctly.
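A basic proxy test can be scripted: send one request through each proxy, time it, and record the status code. In the sketch below, `check_proxies` and `urllib_fetch_status` are hypothetical helpers. The test URL is a placeholder, and the 5-second cutoff is an arbitrary assumption.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class ProxyCheck:
    proxy: str
    ok: bool
    status: Optional[int]     # None if the request failed outright
    seconds: Optional[float]  # None if the request failed outright


def urllib_fetch_status(proxy: str, test_url: str = "https://example.com", timeout: float = 5.0) -> int:
    """Send one request through proxy and return the HTTP status code."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({"http": proxy, "https": proxy}))
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # a 4xx/5xx reply still means the proxy answered


def check_proxies(
    proxies: Sequence[str],
    fetch_status: Callable[[str], int] = urllib_fetch_status,
    max_seconds: float = 5.0,
) -> List[ProxyCheck]:
    """Time one test request per proxy; a proxy passes on HTTP 200 within max_seconds."""
    results = []
    for proxy in proxies:
        start = time.perf_counter()
        try:
            status = fetch_status(proxy)
        except OSError:  # connection refused, timeout, DNS failure, etc.
            results.append(ProxyCheck(proxy, False, None, None))
            continue
        elapsed = time.perf_counter() - start
        results.append(ProxyCheck(proxy, status == 200 and elapsed <= max_seconds, status, elapsed))
    return results
```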

4 Best Practices for Using Proxies in Web Scraping

1. Rotating IP Addresses

To keep a web scraping project running smoothly, rotate IP addresses so that no single IP sends too many requests. Rotation keeps your activity anonymous and reduces the chance that websites block or ban any one address.
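One way to combine rotation with block handling is to move to the next proxy whenever a response looks like a block. This sketch treats HTTP 403 (Forbidden) and 429 (Too Many Requests) as block signals, which is a common but not universal convention. `fetch(url, proxy)` is an assumed callable that returns `(status, body)`.

```python
from typing import Callable, Sequence, Tuple

# Status codes commonly returned when a client is blocked or rate-limited.
BLOCK_STATUSES = {403, 429}


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], Tuple[int, bytes]],
) -> Tuple[str, int, bytes]:
    """Try url through each proxy in order and return (proxy, status, body)
    for the first response whose status is not a block signal."""
    for proxy in proxies:
        status, body = fetch(url, proxy)
        if status not in BLOCK_STATUSES:
            return proxy, status, body
        # Blocked: fall through and retry with the next IP address.
    raise RuntimeError(f"every proxy was blocked for {url}")
```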

2. Setting User-Agent Headers

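Another important best practice is to set the User-Agent header. Many HTTP libraries send a default User-Agent that identifies them as a script (Python's urllib, for example, sends "Python-urllib/" followed by the Python version). Setting a browser-like User-Agent further disguises your activity and makes it harder for websites to detect that you are collecting their data.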
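With Python's standard library, setting the header takes one argument to `urllib.request.Request`. The User-Agent strings below are examples only and go out of date as browsers update, so treat the list as a placeholder. `build_request` is a hypothetical helper.

```python
import random
import urllib.request
from typing import Optional

# Example browser User-Agent strings (placeholders; refresh them periodically).
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]


def build_request(url: str, user_agent: Optional[str] = None) -> urllib.request.Request:
    """Create a request with a browser-like User-Agent (random if none is given)."""
    agent = user_agent or random.choice(USER_AGENTS)
    return urllib.request.Request(url, headers={"User-Agent": agent})
```

The resulting `Request` object can be passed to `urllib.request.urlopen()` or to a proxied opener's `open()` method.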

3. Avoiding Suspicious Activity

In order to ensure that your proxies are not blocked or banned, it is important to avoid any suspicious activity.

This includes sending too many requests in a short period of time, using the same IP address for too long, and accessing websites that have been blacklisted by search engines.
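To keep request rates modest, you can add randomized delays and cap how many requests each IP sends before switching. The sketch below is a hypothetical scheduler, and its default limits (50 requests per proxy, 1 to 3 seconds between requests) are arbitrary assumptions to tune per site. The `sleep` parameter is injectable so the pacing can be tested without waiting.

```python
import random
import time
from typing import Callable, Iterator, Sequence, Tuple


def polite_schedule(
    urls: Sequence[str],
    proxies: Sequence[str],
    max_per_proxy: int = 50,
    min_delay: float = 1.0,
    max_delay: float = 3.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[Tuple[str, str]]:
    """Yield (url, proxy) pairs, waiting a random interval before every request
    after the first and moving to the next proxy every max_per_proxy requests."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    for i, url in enumerate(urls):
        if i > 0:
            # Randomized pauses help avoid the fixed rhythm typical of simple bots.
            sleep(random.uniform(min_delay, max_delay))
        proxy = proxies[(i // max_per_proxy) % len(proxies)]
        yield url, proxy
```

In practice you would loop with `for url, proxy in polite_schedule(urls, proxies):` and send each request inside the loop.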

4. Monitoring Proxy Performance

Finally, it is important to monitor the performance of your proxies in order to ensure that they are working correctly.

You can do this with proxy testers or your own request logs: check response times and success rates, and verify that IP addresses are not blocked or blacklisted.
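If you log each request yourself, a small aggregator is enough to spot failing proxies. `ProxyMonitor` and its thresholds (flag a proxy once it has at least 20 requests and a success rate below 80%) are hypothetical choices for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, List


@dataclass
class ProxyStats:
    requests: int = 0
    successes: int = 0
    total_seconds: float = 0.0

    @property
    def success_rate(self) -> float:
        return self.successes / self.requests if self.requests else 0.0

    @property
    def avg_seconds(self) -> float:
        return self.total_seconds / self.requests if self.requests else 0.0


class ProxyMonitor:
    """Aggregates per-proxy results and flags proxies that fail too often."""

    def __init__(self, min_success_rate: float = 0.8, min_requests: int = 20) -> None:
        self.min_success_rate = min_success_rate
        self.min_requests = min_requests  # avoid judging a proxy on too few samples
        self.stats: DefaultDict[str, ProxyStats] = defaultdict(ProxyStats)

    def record(self, proxy: str, ok: bool, seconds: float) -> None:
        """Add the outcome and duration of one request made through proxy."""
        s = self.stats[proxy]
        s.requests += 1
        s.successes += int(ok)
        s.total_seconds += seconds

    def unhealthy(self) -> List[str]:
        """Return proxies with enough samples and a success rate below the threshold."""
        return [
            proxy for proxy, s in self.stats.items()
            if s.requests >= self.min_requests and s.success_rate < self.min_success_rate
        ]
```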

What Are the Legal and Ethical Considerations for Web Scraping?

Web scraping can be a powerful tool for gathering data, but it is important to ensure that you are following the laws and ethical guidelines set by various countries and organizations.

In particular, it is essential to respect copyrights and privacy regulations, as well as obtain permission from the owners of any websites or databases being accessed.

You should also ensure that data is collected responsibly and not used for malicious purposes. Finally, be transparent about the information being collected and give proper credit to any websites or databases accessed.

Overview of Relevant Laws and Regulations

Web scraping falls under the purview of laws and regulations related to copyright, data protection, intellectual property, and privacy.

In particular, be aware of the General Data Protection Regulation (GDPR) in the European Union and the Computer Fraud and Abuse Act (CFAA) in the United States.

There are also various state laws that govern web scraping and data collection.

Ethical Guidelines for Responsible Web Scraping

In order to ensure that your web scraping activities are ethical and responsible, it is important to follow a few guidelines.

First, you must obtain permission from the owners of any websites or databases being accessed. Second, data should only be collected for legitimate purposes and not used for malicious activities.

Third, information should be collected transparently and properly credited to the source. Finally, any data collected should be used responsibly and not shared with third parties without consent.
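A site owner's `robots.txt` file is one machine-readable signal of their wishes, and Python can check it with the standard `urllib.robotparser` module. Honoring it is a good baseline, but it does not replace obtaining permission or reviewing a site's terms. The rules parsed below are a made-up example, and the helper names and the "MyScraper" agent name are hypothetical.

```python
import urllib.robotparser
from typing import Iterable


def load_robots_from_lines(lines: Iterable[str]) -> urllib.robotparser.RobotFileParser:
    """Build a parser from robots.txt content you already have."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(lines)
    return parser


def load_robots_for_site(site: str) -> urllib.robotparser.RobotFileParser:
    """Download and parse https://<site>/robots.txt (requires network access)."""
    parser = urllib.robotparser.RobotFileParser(f"https://{site}/robots.txt")
    parser.read()
    return parser


# Made-up example rules for illustration.
EXAMPLE_ROBOTS = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 2",
]

rules = load_robots_from_lines(EXAMPLE_ROBOTS)
print(rules.can_fetch("MyScraper", "https://example.com/private/data"))  # False
print(rules.can_fetch("MyScraper", "https://example.com/products"))      # True
print(rules.crawl_delay("MyScraper"))                                     # 2
```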

Conclusion

At the end of the day, proxies can boost your web scraping and data collection efforts and make them more efficient.

By following best practices, such as regularly refreshing your IP address pool so that your crawlers stay unblocked across different sites, and guarding against malicious bots that steal resources or cause performance issues, you can get the most out of your proxy servers and collect more accurate and timely results.

And trust me, you won't want to miss the services offered by Stella Proxies. Sign up today and get amazing offers before they run out.
